My first two articles, on external-dns and certificate management in a Kubernetes cluster, were prerequisites for this one, which was actually my first idea for an article. Here, I present the dynamic environments feature provided by GitLab (available in all tiers). I find this feature helpful in a review process because it provides a playground to test the deployed application, rather than staying at the code level only. To follow this article, there are 3 prerequisites:

Follow my external-dns article to set up the DNS discovery process

Follow, optionally, my certificate management one to secure access to components

Have some basic knowledge of the gitlab-ci model, Kubernetes & Helm

First, let's present what an environment is in gitlab-ci: an environment is declared at the job level in your CI description. GitLab links the job execution context (commit, timestamp, ...) to the environment so that you can easily know what is deployed on it.

For this article, I created a very simple application composed of a single static HTML page and dockerized it. Sources can be found in the article repository on gitlab.com. Let's build the image in a .gitlab-ci.yml:

    stages:
      - 🐳

    # Build image and store it in GitLab registry
    📦:
      stage: 🐳
      image:
        name: gcr.io/kaniko-project/executor:v1.9.2-debug
        entrypoint: [""]
      # Use kaniko to build the image without a Docker daemon: https://github.com/GoogleContainerTools/kaniko
      script:
        - /kaniko/executor
          --context "${CI_PROJECT_DIR}"
          --dockerfile "${CI_PROJECT_DIR}/Dockerfile"
          --destination "${CI_REGISTRY_IMAGE}:${CI_COMMIT_BRANCH}"
      rules:
        # Only build image on main branch
        - if: $CI_COMMIT_BRANCH == "main"

Now, let's deploy it through the gitlab-ci mechanism and link it to a static environment, main.mydns.fr. I've packaged the application with Helm to make it easy to parameterize. But to deploy it, we need to interact with a k8s cluster. There are 2 options for this in gitlab-ci: use a variable holding the KUBECONFIG content, or use the Kubernetes agent provided by GitLab. In this article, I chose the agent option as it's more integrated within GitLab. Thus, I deployed the agent with the classic documented configuration.

    helm upgrade --install demo gitlab/gitlab-agent \
      --namespace gitlab-agent-demo \
      --create-namespace \
      --set image.tag=v16.0.0-rc1 \
      --set config.token=my_token \
      --set config.kasAddress=wss://kas.gitlab.com

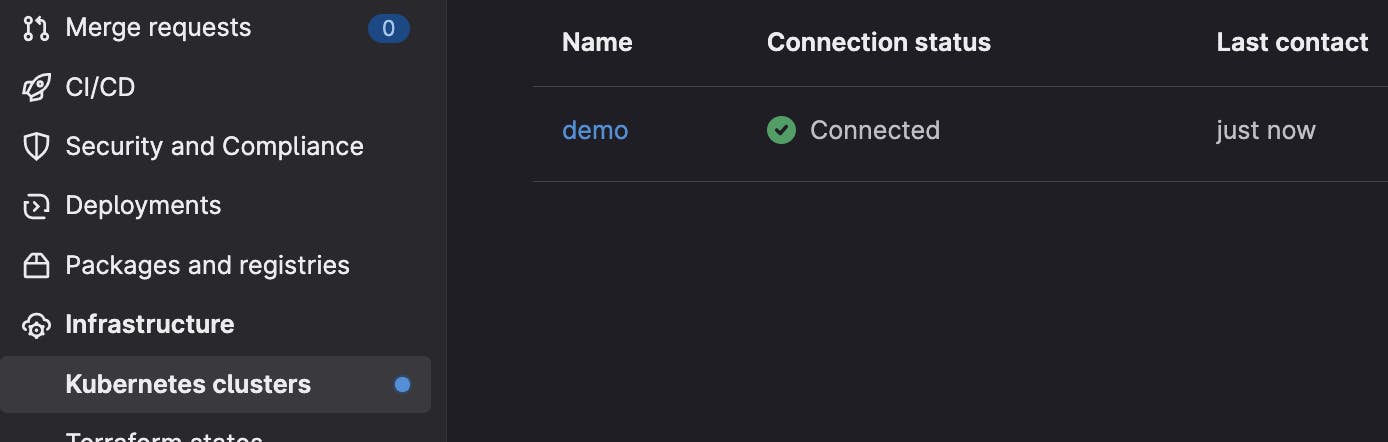

In my GitLab project, I can see that my cluster is connected and synchronized
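The agent itself is configured through a file in the repository where it is registered. As a minimal sketch, assuming the agent is named demo and lives in the fun_with/fun-with-gitlab/dynamic-environments project (both inferred from the context name used in the jobs of this article), the file below would let the pipelines of another project use the same agent. The second project path is purely hypothetical, and this authorization should only be needed when pipelines run outside the agent's own project.

```yaml
# .gitlab/agents/demo/config.yaml
# Assumption: "demo" is the agent name, taken from the kubectl context used in this article
ci_access:
  projects:
    # Hypothetical project whose CI jobs would be allowed to use this agent
    - id: fun_with/fun-with-gitlab/another-project
```

A job in an authorized project then selects the context with the same `path/to/agent/project:agent-name` pattern used in the deployment jobs.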

So, to do a quick intermediate summary, I have an image stored in a registry, a Kubernetes cluster connected to my GitLab and a helm chart defined to deploy a sample static HTML page.

Now, I define a new job in my pipeline to deploy my application into my cluster:

    ☸️:
      image:
        name: dtzar/helm-kubectl
      # The 🚀 stage must also be declared under stages:
      stage: 🚀
      script:
        # Use kubernetes agent context
        - kubectl config use-context fun_with/fun-with-gitlab/dynamic-environments:demo
        # Install/upgrade my helm chart
        - helm upgrade ${CI_COMMIT_BRANCH}-env ./helm/ --set dockerconfig=${DOCKER_CONFIG} --install --create-namespace -n ${CI_PROJECT_NAME}-${CI_COMMIT_BRANCH}
      # Define the environment linked to this job
      environment:
        name: main
        url: https://main.mydns.fr

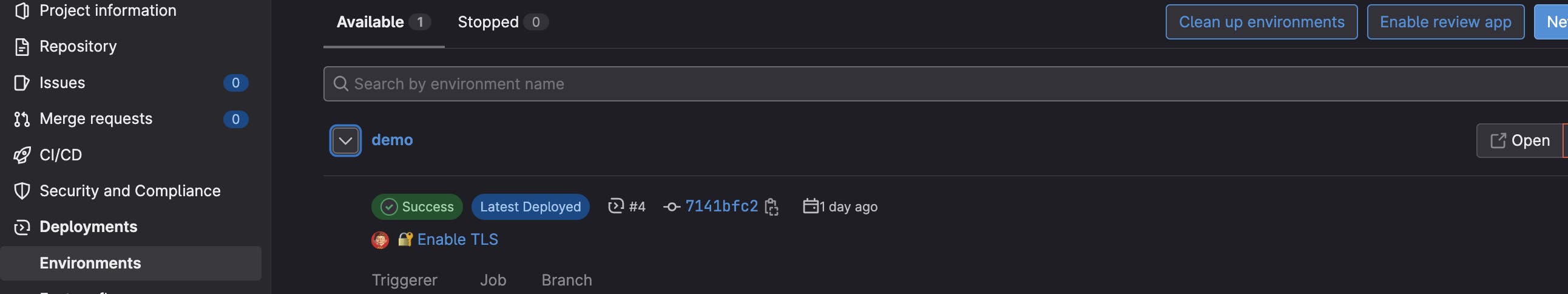

Once my job has completed successfully, the Environment menu shows a new environment, with information about when it was updated and from which commit, plus a link to open the defined URL in another tab.

Clicking on Open, I can see my webpage

This is an interesting but very static approach, as we have a static URL linked to a specific job. If I run the same job on different branches, the deployment is replaced each time, so it becomes hard to know which sources the environment is based on. It would be much more relevant to provide an environment per branch or per merge request, so that up-to-date elements are deployed and can be easily reviewed. Let's improve our current job to make it more dynamic. I create a dedicated branch, dynamic-env, to set up the configuration:

    ☸️:
      image:
        name: dtzar/helm-kubectl
      stage: 🚀
      script:
        - |
          # Use kubernetes agent context
          kubectl config use-context fun_with/fun-with-gitlab/dynamic-environments:demo
          # Install/upgrade my helm chart
          helm upgrade ${CI_COMMIT_REF_NAME}-env ./helm/ \
            --set dockerconfig=${DOCKER_CONFIG} \
            --set pod.version=${CI_COMMIT_REF_NAME} \
            --set env=${CI_COMMIT_REF_NAME} \
            --install --create-namespace \
            -n ${CI_PROJECT_NAME}-${CI_ENVIRONMENT_SLUG} \
            --recreate-pods
      environment:
        name: $CI_COMMIT_REF_NAME
        url: https://$CI_COMMIT_REF_NAME.mydns.fr
      rules:
        # Deploy on main branch
        - if: $CI_COMMIT_BRANCH == "main"
        # and on merge requests
        - if: $CI_PIPELINE_SOURCE == "merge_request_event"

In this job, I add some variables for the helm deployment:

pod.version=${CI_COMMIT_REF_NAME}: defines the tag of the container image to deploy

env=${CI_COMMIT_REF_NAME}: defines the name of the DNS entry on which the ingress is listening

The environment name and URL are based on the same ${CI_COMMIT_REF_NAME}
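To illustrate how the env value can end up in a DNS entry, here is a hedged sketch of what an Ingress template in the chart could look like. This is not the chart from the article repository: the file name, the resource name and the web Service are assumptions made for the example. Only .Values.env and the mydns.fr domain come from the job above.

```yaml
# templates/ingress.yaml (hypothetical file; the real chart may differ)
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-{{ .Values.env }}
spec:
  rules:
    # One hostname per environment, e.g. dynamic-env.mydns.fr
    - host: {{ .Values.env }}.mydns.fr
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: web        # hypothetical Service name
                port:
                  number: 80
```

Since the value is used as a DNS label, branch names containing slashes or uppercase letters would not produce a valid host. GitLab's predefined CI_COMMIT_REF_SLUG variable is a sanitized form of the ref name that may be a safer choice in that case.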

Once the pipeline is done, a new environment is available in the environment listing

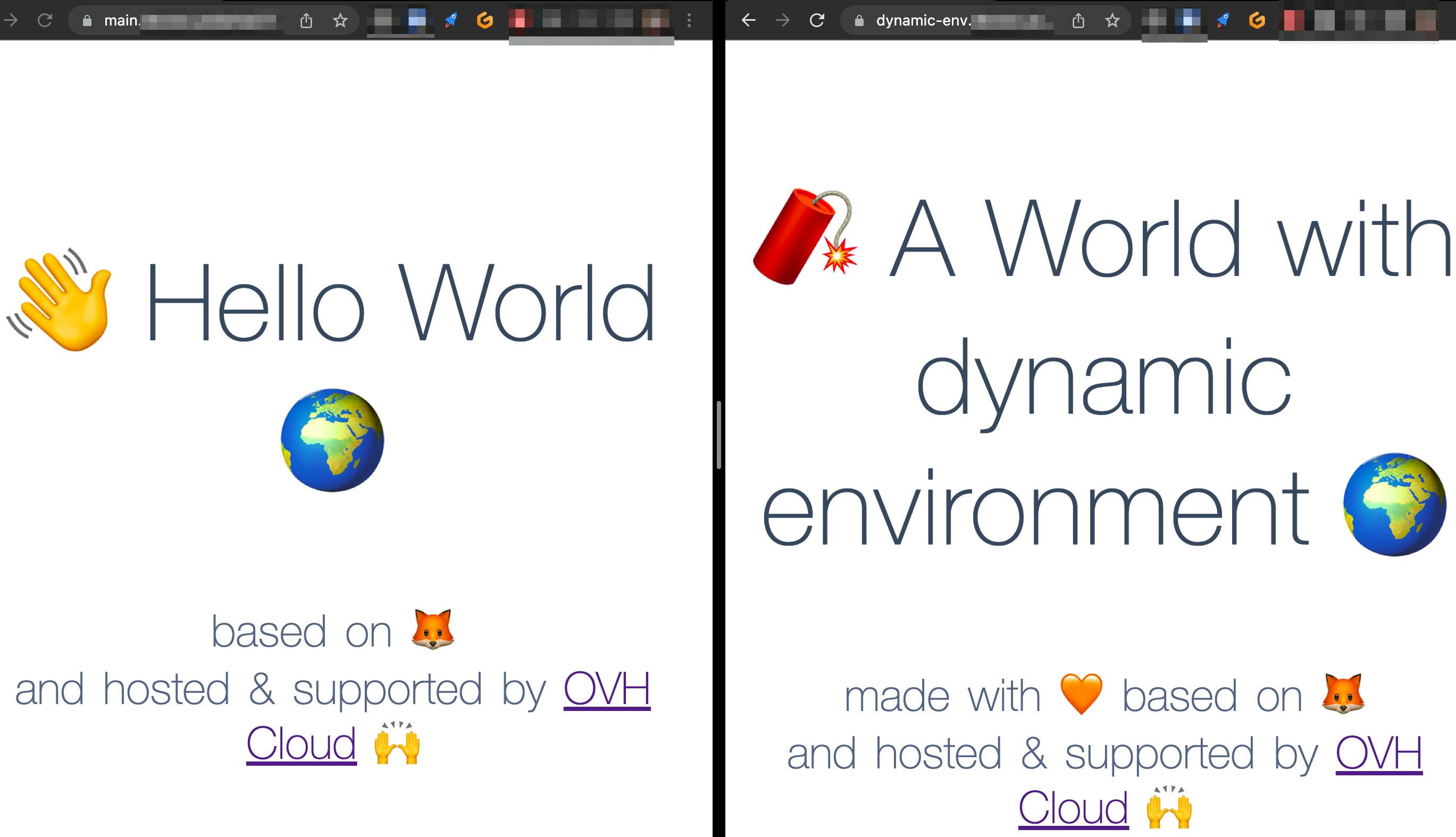

Let's make a quick change to my HTML to check that the environment on the branch differs from the one on the main branch, and launch a pipeline. Once the pipeline is done, I have 2 environments with 2 versions of my application.

It works 💥 but it would be interesting to clean them up. First, I add a SleepInfo in my chart to automatically stop the deployment at night. This is explained in my article presenting kube-green.

    apiVersion: kube-green.com/v1alpha1
    kind: SleepInfo
    metadata:
      name: sleep-{{ .Values.env }}
    spec:
      weekdays: "*"
      sleepAt: "22:30"
      wakeUpAt: "07:30"
      timeZone: "Europe/Paris"

But it would also be interesting to automatically delete the environment and its associated resources when the merge request is closed. To do so, I adapt the previous job to set an on_stop action:

      environment:
        name: $CI_COMMIT_REF_NAME
        url: https://$CI_COMMIT_REF_NAME.mydns.fr
        on_stop: ✋_stop

And I add a new job that is triggered when a merge request is closed, or that can be started manually. This job calls the helm delete command to remove all resources:

    ✋_stop:
      image:
        name: dtzar/helm-kubectl
      stage: 🚀
      script:
        - |
          kubectl config use-context fun_with/fun-with-gitlab/dynamic-environments:demo
          # Delete helm chart
          helm delete ${CI_COMMIT_REF_NAME}-env -n ${CI_PROJECT_NAME}-${CI_ENVIRONMENT_SLUG}
          # Delete associated namespace
          kubectl delete namespace ${CI_PROJECT_NAME}-${CI_ENVIRONMENT_SLUG}
      environment:
        name: $CI_COMMIT_REF_NAME
        action: stop
      rules:
        - if: $CI_MERGE_REQUEST_ID
          when: manual
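One caveat worth keeping in mind: if the source branch is deleted when the merge request is merged, the runner may fail to check it out when the stop job starts. GitLab's documentation suggests skipping the checkout for stop jobs. A minimal sketch of that addition, which is not part of the original pipeline, relies on the fact that the script above only talks to the cluster and reads no file from the repository:

```yaml
✋_stop:
  variables:
    # Skip the repository checkout: helm delete and kubectl delete need no source files
    GIT_STRATEGY: none
```

These keys are meant to be merged into the existing ✋_stop job, not to replace it.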

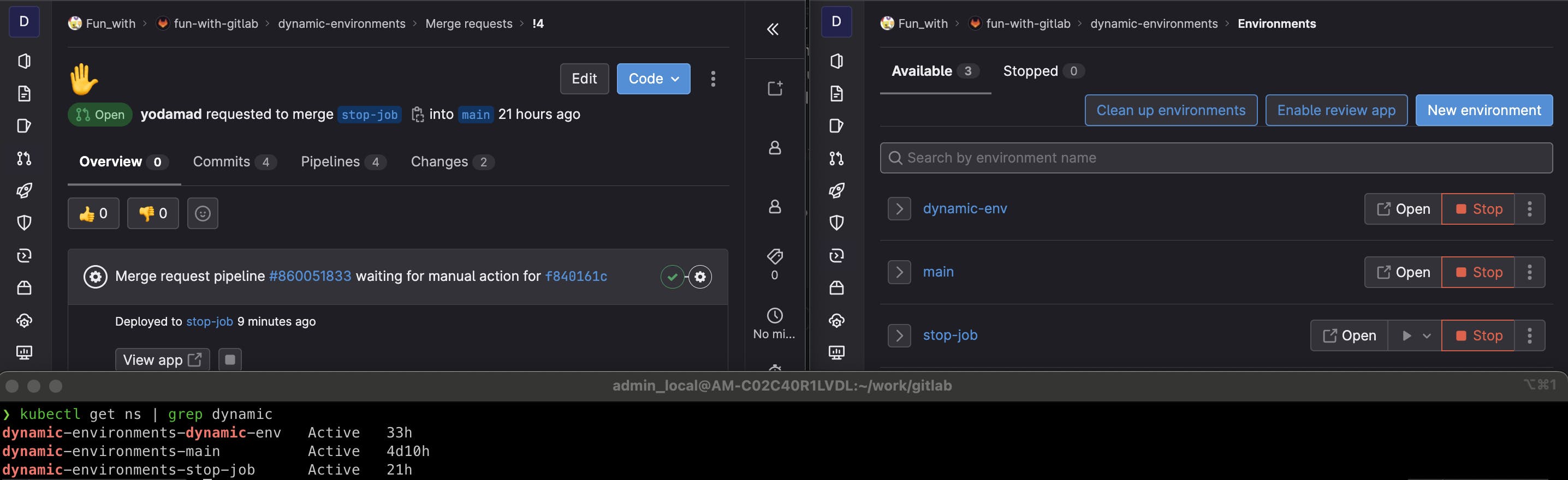

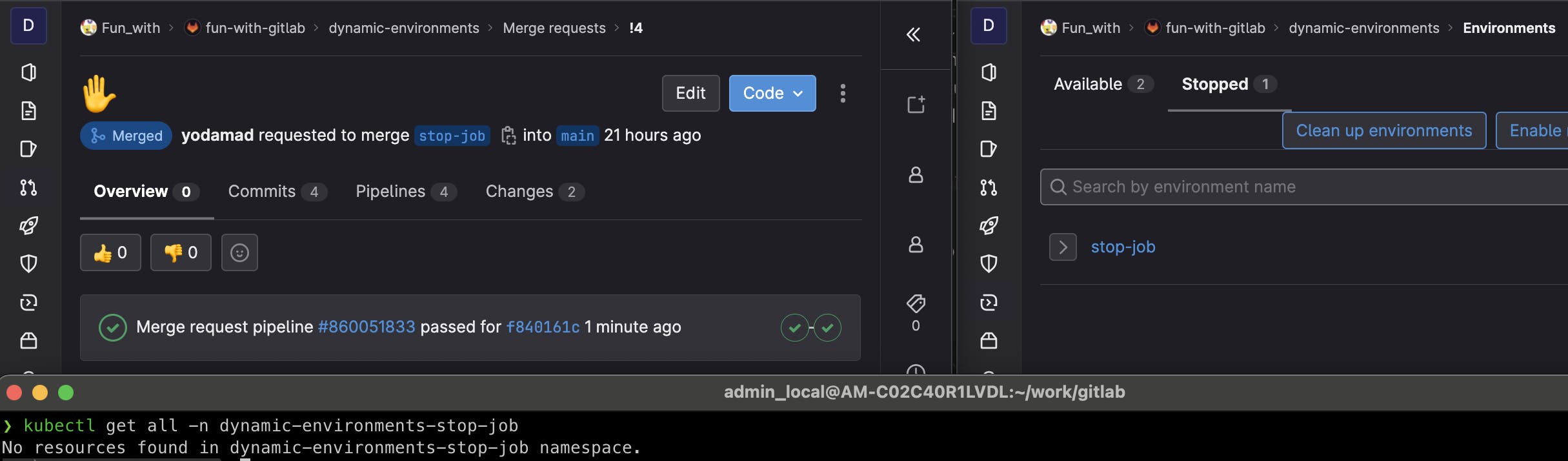

I create a dedicated branch, stop-job, to illustrate. As long as the MR is open, the associated environment is up and visible in the environment list. On my cluster, I have a namespace dynamic-environments-stop-job.

When I merge it, the ✋_stop job is triggered and, once it is done, the environment is marked as Stopped and there are no resources left in my cluster.
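For merge requests that stay open a long time, GitLab also offers a time-based stop through the auto_stop_in keyword, which triggers the same on_stop job once the delay expires. This is an optional variation, not something configured in the original pipeline, and the one week duration is an arbitrary value chosen for the example:

```yaml
☸️:
  environment:
    name: $CI_COMMIT_REF_NAME
    on_stop: ✋_stop
    # Arbitrary example duration: stop the environment one week after its last deployment
    auto_stop_in: 1 week
```

Each new deployment to the environment resets the timer, so an actively updated merge request keeps its playground.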

To conclude, this article shows how to get a dynamic environment for each merge request, enhancing the review process with visual access to the deployed application. Such environments can also be used to run automated tests for UI, performance, security & accessibility, for instance.

🙏 Thanks again to OVHcloud for sponsoring the hosting of my demos on their managed Kubernetes service. 👨🎨 Thank you Philippe Charrière for the review & the awesome CSS sheet to magnify my sample.

Sources can be found in the article repository on gitlab.com