In this article, I'm going to explain how to set up the external-dns module in a Kubernetes cluster. This module is useful for generating dynamic URLs when deploying applications in a cluster. It detects resources as they are deployed into the cluster and automatically creates the matching DNS records, so applications are reachable through a readable hostname rather than just an IP address. This is also part of my journey to learn about the Kubernetes ecosystem.

So, it started with a simple tweet when I decided to work on my Kubernetes skills...

Then Exoscale contacted me to support my journey. So here I'm presenting my first accomplishments with #kubernetes and #external-dns. To reproduce the following steps, there are a few prerequisites:

- kubectl CLI (HowTo)
- A Kubernetes cluster (locally or managed)
- A NetworkPolicy provider installed in the cluster (HowTo, here we'll use Calico preconfigured in a managed cluster)

So, first, I created a cluster on a SaaS platform. I did it on portal.exoscale.com, as it's really easy and fast, and then connected to it with kubectl.
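Connecting to the cluster can be sketched as follows. This is only an illustration: the kubeconfig path is a hypothetical location for the file downloaded from the provider, and `use_cluster` is a helper name made up for this example.

```shell
# Point kubectl at the new cluster and check that the API server answers.
# Assumption: the kubeconfig was saved at this hypothetical path.
use_cluster() {
  export KUBECONFIG="${1:-$HOME/.kube/exoscale-demo.yaml}"
  kubectl cluster-info && kubectl get nodes
}

# Usage: use_cluster /path/to/kubeconfig
```

If the nodes are listed in Ready state, kubectl is talking to the right cluster.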

Before installing and configuring external-dns, I need to install an ingress controller to manage the ingresses in my cluster. I chose the classic nginx-ingress-controller, which I deploy with the following configuration (as it's a very long file, follow the link to retrieve it).

Now I can deploy my ingress-controller

kubectl apply -f deploy-ingress-control.yaml

Output should look like this:

Resources are created in a dedicated namespace called ingress-nginx

kubectl get pods -n ingress-nginx
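Rather than polling the pod list by hand, the check can be scripted. The sketch below assumes the manifest follows the upstream ingress-nginx layout, that is, a controller pod labelled `app.kubernetes.io/component=controller` and a Service named `ingress-nginx-controller`. Both names are assumptions, since the manifest itself is only linked here, and the helper function names are made up for this example.

```shell
# Block until the ingress controller pod reports Ready (or time out).
wait_for_ingress_controller() {
  local ns="${1:-ingress-nginx}"
  kubectl wait --namespace "$ns" \
    --for=condition=ready pod \
    --selector=app.kubernetes.io/component=controller \
    --timeout=120s
}

# Print the public IP of the controller's LoadBalancer Service.
# Assumption: the Service is named ingress-nginx-controller.
ingress_external_ip() {
  kubectl get service --namespace "${1:-ingress-nginx}" ingress-nginx-controller \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Usage: wait_for_ingress_controller && ingress_external_ip
```

The IP printed by the second function is the address the DNS records should eventually point to.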

Now, we need to handle the DNS configuration side. First, you need a domain name, which you can buy from any good provider. In my case, I use IONOS 🇫🇷, but if you are using a hyperscaler like Microsoft or Google, you should probably host everything on the same platform (domain name, managed cluster ...).

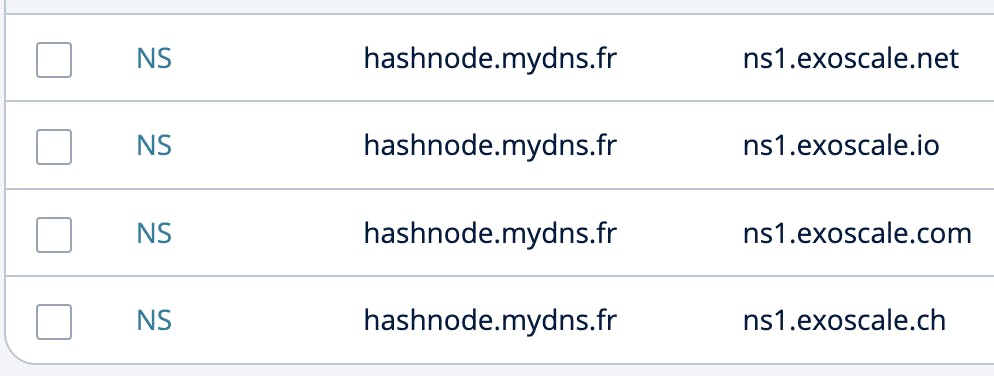

Once you have your domain name, you can either use it entirely for your cluster or dedicate only a subdomain to it. In my case, I already use my main domain for other projects, so I use a subdomain, in this example hashnode.mydns.fr. In both cases, you have to configure the DNS at your registrar to delegate requests for this subdomain to your DNS service provider (the exoDNS service in my case), and, on the other side, tell exoDNS to handle this subdomain. This delegation is done with NS records. I've used the exoDNS service because Ionos is not supported by external-dns for now, which makes the architecture a little more complicated but helps to understand what is happening.

ExoDNS configuration

Based on ExoDNS information, Ionos configuration
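Once both sides are configured, the delegation can be checked from a terminal. This is a minimal sketch using `dig`. The function names are made up for this example, and the `exoscale` substring used in the usage line is an assumption about how the exoDNS name servers are named, so compare against the values shown in your own exoDNS console.

```shell
# List the name servers the subdomain is delegated to.
delegated_nameservers() {
  dig +short NS "${1:-hashnode.mydns.fr}"
}

# Succeed if at least one delegated name server contains the given substring.
is_delegated_to() {
  delegated_nameservers "$1" | grep -qi "$2"
}

# Usage: is_delegated_to hashnode.mydns.fr exoscale && echo "delegation looks fine"
```

An empty answer usually means the NS records at the registrar are missing or have not propagated yet.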

Now that our DNS is correctly configured, we can start installing external-dns. Before doing so, to keep things as clean as possible (with my 101 level in Kubernetes), I create a dedicated namespace for the elements I'll install, and I configure kubectl to use this new namespace:

kubectl create namespace dns-management

kubectl config set-context --current --namespace=dns-management
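To confirm that the context switch took effect, the active namespace can be read back from the kubeconfig. A small sketch (the function name is made up for this example):

```shell
# Print the namespace configured for the current kubectl context.
current_namespace() {
  kubectl config view --minify --output 'jsonpath={..namespace}'
}

# Usage: current_namespace
```

After the two commands above, this should print dns-management.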

Now we can deploy external-dns with the following configuration. You have to set up several elements:

- provider: the DNS provider you rely on, here exoscale, but external-dns supports many of them
- source: the type of component to monitor. Here I choose ingress, but it can also be service, for instance
- policy: the kind of synchronization you want to do with the DNS. I choose sync so external-dns creates AND deletes entries. You can choose not to, other policies are available.
- domain-filter: the domain or subdomain to manage. In my example, hashnode.mydns.fr
- specific configuration keys according to your DNS provider. Here 3 are necessary for exoscale: exoscale-endpoint, exoscale-apikey, exoscale-apisecret

apiVersion: apps/v1
kind: Deployment
metadata:
  name: external-dns
spec:
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: external-dns
  template:
    metadata:
      labels:
        app: external-dns
    spec:
      # Only use if you're also using RBAC
      serviceAccountName: external-dns
      containers:
        - name: external-dns
          image: registry.k8s.io/external-dns/external-dns:v0.13.4
          args:
            - --source=ingress
            - --provider=exoscale
            - --domain-filter=hashnode.mydns.fr
            - --policy=sync
            - --txt-owner-id=exoscale-external-dns
            - --exoscale-endpoint=https://api.exoscale.ch/dns
            - --exoscale-apikey=<secured_apikey>
            - --exoscale-apisecret=<very_secured_apisecret>
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: external-dns
  namespace: dns-management
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: external-dns
rules:
  - apiGroups: [""]
    resources: ["services","endpoints","pods"]
    verbs: ["get","watch","list"]
  - apiGroups: ["extensions","networking.k8s.io"]
    resources: ["ingresses"]
    verbs: ["get","watch","list"]
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: external-dns-viewer
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: external-dns
subjects:
  - kind: ServiceAccount
    name: external-dns
    namespace: dns-management

Beware: if the namespace is not default, it has to be set in both the ClusterRoleBinding and the ServiceAccount.

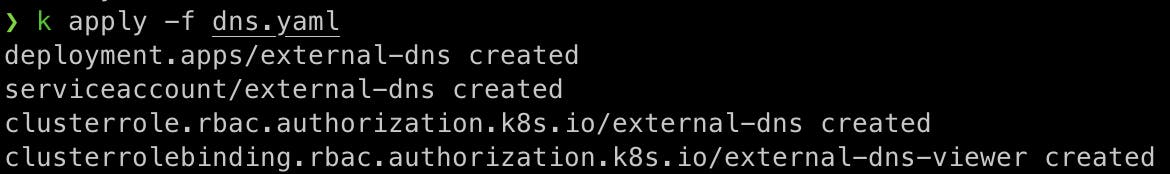

kubectl apply -f external-dns.yaml

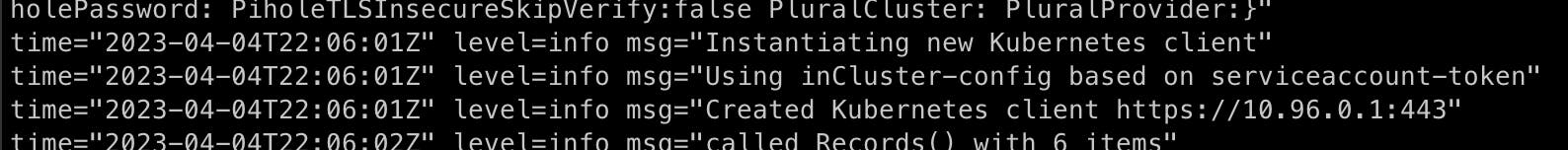
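Before looking at the logs, it can help to wait until the Deployment has actually rolled out. A minimal sketch, assuming the Deployment name and namespace used in the manifest above (the function name is made up for this example):

```shell
# Wait for the external-dns Deployment to finish rolling out (or time out).
wait_for_external_dns() {
  kubectl rollout status deployment/external-dns \
    --namespace "${1:-dns-management}" --timeout=120s
}

# Usage: wait_for_external_dns
```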

Now, we can check in the logs of the external-dns pod that everything is ok:

$ kubectl get pods -n dns-management
NAME                           READY   STATUS    RESTARTS   AGE
external-dns-688cf888c-6nvbx   1/1     Running   0          10h

$ kubectl logs -f external-dns-688cf888c-6nvbx

In the logs, you should see

If you see the error "failed to sync v1.Ingress: context deadline exceeded", it is because the right namespace is missing from the external-dns YAML file (check the ServiceAccount and the ClusterRoleBinding subject).
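When troubleshooting this, two small helpers can save some typing. The first reads the logs through the Deployment, so the generated pod name does not need to be copied. The second asks the API server whether the external-dns ServiceAccount is allowed to list ingresses cluster-wide, which is my reading of what goes wrong when the namespace is missing from the ClusterRoleBinding. Function names are made up for this example; the resource names come from the manifest above.

```shell
# Show the latest log lines of external-dns without knowing the pod name.
external_dns_logs() {
  kubectl logs deployment/external-dns \
    --namespace "${1:-dns-management}" --tail="${2:-50}"
}

# Print "yes" if the external-dns ServiceAccount may list ingresses in all
# namespaces, "no" otherwise (for example when the binding's namespace is wrong).
can_list_ingresses() {
  local ns="${1:-dns-management}"
  kubectl auth can-i list ingresses.networking.k8s.io --all-namespaces \
    --as="system:serviceaccount:${ns}:external-dns"
}

# Usage: can_list_ingresses && external_dns_logs
```

A "no" answer points at the RBAC objects rather than at the DNS provider settings.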

Now let's check if external-dns is working well. I deploy a very simple application based on nginx

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hashnode
  annotations:
    kubernetes.io/ingress.class: nginx
spec:
  rules:
    - host: demo.hashnode.mydns.fr
      http:
        paths:
          - backend:
              service:
                name: hashnode
                port:
                  number: 80
            pathType: Prefix
            path: /
---
apiVersion: v1
kind: Service
metadata:
  name: hashnode
spec:
  ports:
    - port: 80
      targetPort: 80
  selector:
    app: hashnode
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hashnode
spec:
  selector:
    matchLabels:
      app: hashnode
  template:
    metadata:
      labels:
        app: hashnode
    spec:
      containers:
        - image: nginxdemos/hello
          name: hashnode
          ports:
            - containerPort: 80

In a dedicated namespace, I deploy this application

kubectl create namespace hashnode

kubectl apply -f hashnode-demo.yaml -n hashnode

kubectl get pods -n hashnode # 1 pod in Running state

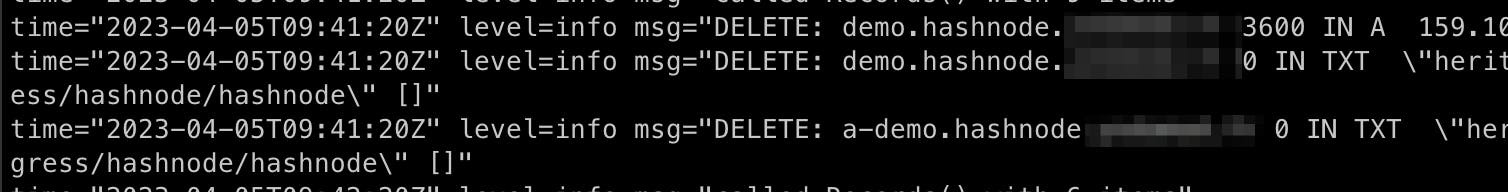
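With the ingress source, external-dns takes the DNS target from the address published in the Ingress status, so it is worth checking that the ingress controller has filled it in. A small sketch, assuming the controller publishes an IP (some load balancers publish a hostname instead); the function name is made up for this example:

```shell
# Print the IP address published in the status of the demo Ingress.
ingress_address() {
  kubectl get ingress "${1:-hashnode}" --namespace "${2:-hashnode}" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Usage: ingress_address hashnode hashnode
```

If nothing is printed, external-dns has no target yet and will not create a record for this host.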

kubectl logs -f external-dns-688cf888c-6nvbx -n dns-management

In the logs of the external-dns pod, after a few seconds, you can see that 3 entries are created in the DNS:

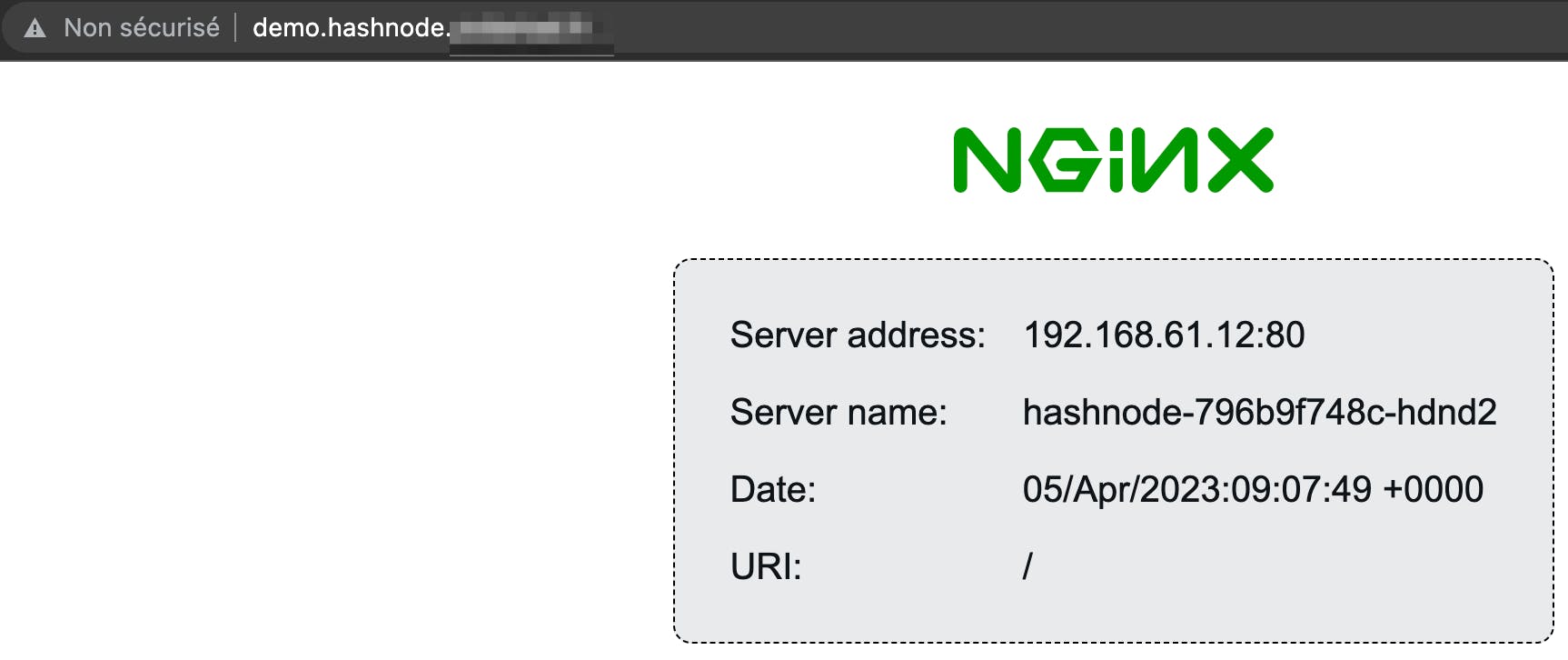
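The same entries can be inspected from outside the cluster. external-dns keeps ownership information in TXT records (this is what the --txt-owner-id flag in the manifest identifies), so I would expect the entries to be the A record for demo.hashnode.mydns.fr plus TXT ownership records. That reading is an assumption, since the exact log lines are only visible in the screenshot. The function name below is made up for this example.

```shell
# Print the A and TXT records for a host, one per line, prefixed by type.
dns_records() {
  local host="${1:-demo.hashnode.mydns.fr}"
  local type
  for type in A TXT; do
    dig +short "$type" "$host" | sed "s/^/$type /"
  done
}

# Usage: dns_records demo.hashnode.mydns.fr
```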

And now we can check that it's working: in a browser open a tab with the configured URL

Great, it works !! 🥳
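The browser check can also be done from a terminal, which is handy in a script. A minimal sketch with `curl`; the function name is made up for this example and plain HTTP is assumed, as no TLS was configured in the Ingress above.

```shell
# Print the HTTP status code returned by the demo application.
http_status() {
  curl --silent --output /dev/null --write-out '%{http_code}' \
    --max-time 10 "http://${1:-demo.hashnode.mydns.fr}/"
}

# Usage: [ "$(http_status)" = "200" ] && echo "application reachable"
```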

Now, just to be sure, we can undeploy the application. external-dns will automatically remove the DNS entries as I configured the policy to sync

kubectl delete -f hashnode-demo.yaml -n hashnode

kubectl get pods -n hashnode # 0 pod in Running state

kubectl logs -f external-dns-688cf888c-6nvbx -n dns-management
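To confirm the removal from the outside, the record can be polled until it disappears. This is a sketch under assumptions: the number of tries and the delay are arbitrary values, the function name is made up for this example, and a caching resolver may keep answering until the record's TTL expires.

```shell
# Poll until the host no longer has an A record, or give up after N tries.
# $1 = host, $2 = number of tries, $3 = delay in seconds between tries
wait_for_dns_removal() {
  local host="${1:-demo.hashnode.mydns.fr}"
  local tries="${2:-12}"
  local delay="${3:-10}"
  local i=0
  while [ "$i" -lt "$tries" ]; do
    if [ -z "$(dig +short A "$host")" ]; then
      echo "no A record left for $host"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "A record for $host still resolves after $tries checks" >&2
  return 1
}

# Usage: wait_for_dns_removal demo.hashnode.mydns.fr 12 10
```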

Everything is ok ✅. Before concluding, here is a quick (partial) diagram to summarize. I didn't add all components, as that would complicate the picture for little extra information.

The DNS architecture described here is complex, as it involves 2 services (Ionos & exoscale), but it helps to understand how things work. If you can, choose a service that both hosts your domain name and works directly with external-dns, such as Google DNS for instance.

In conclusion, this article explains how to configure and use external-dns in a Kubernetes cluster. This can be useful when you want to manage dynamic environments, especially in a CI/CD pipeline and/or when using Infrastructure as Code to do Continuous Deployment. external-dns can then create URLs for each environment and/or feature branch, to ease reviews for example. But this will be covered in another article... 😉
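As a small teaser for the feature-branch idea, here is how a pipeline could derive a hostname from a branch name. It is only a sketch: `branch_host` is a made-up helper, not part of external-dns, and the sanitizing rules (lowercase, non-alphanumeric characters replaced by dashes, 63 characters at most) are simply the usual constraints on a DNS label.

```shell
# Turn a Git branch name into a hostname under the managed subdomain.
# $1 = branch name, $2 = parent domain (defaults to the one used in this article)
branch_host() {
  local branch="$1"
  local domain="${2:-hashnode.mydns.fr}"
  local label
  label=$(printf '%s' "$branch" \
    | tr '[:upper:]' '[:lower:]' \
    | sed -E 's/[^a-z0-9]+/-/g; s/^-+//; s/-+$//' \
    | cut -c1-63 \
    | sed -E 's/-+$//')
  printf '%s.%s\n' "$label" "$domain"
}

# Usage: branch_host feature/Login_Page
```

The resulting name could then be used as the host of an Ingress, and external-dns would pick it up as long as it falls under the configured domain-filter.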

All sources are available in my gitlab repository.

FYI, this has also been tested on a cluster hosted on OVHCloud (plugged with exoDNS & Ionos).