Now that we have our cluster available, on OVHcloud for instance (see my previous article if necessary), or if you already have an existing cluster of your own, let's deploy some components with Pulumi. Pulumi can deploy infrastructure, but it can also deploy components and software inside that infrastructure.

In this article, I'll show two ways to deploy components in a Kubernetes cluster: as a classic deployment of native resources, or with the help of a Helm chart.

Access your cluster

With Pulumi, you can connect to your cluster in two ways.

Classic way: KUBECONFIG

With this approach, you just have to set the KUBECONFIG environment variable, and Pulumi will use it by default.

To be sure that everything is OK, you can add some checks in your code to verify that the variable is set and that you are able to connect to the cluster with it:

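```go
// Kubeconfig is a constant defined elsewhere, presumably holding "KUBECONFIG"
k8sVars := []string{os.Getenv(Kubeconfig)}

// slices.Contains comes from the standard library (Go 1.21+)
if slices.Contains(k8sVars, "") {
	_ = ctx.Log.Error("❌ A mandatory variable is missing, "+
		"check that all these variables are set: KUBECONFIG", nil)
	return nil
}
```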

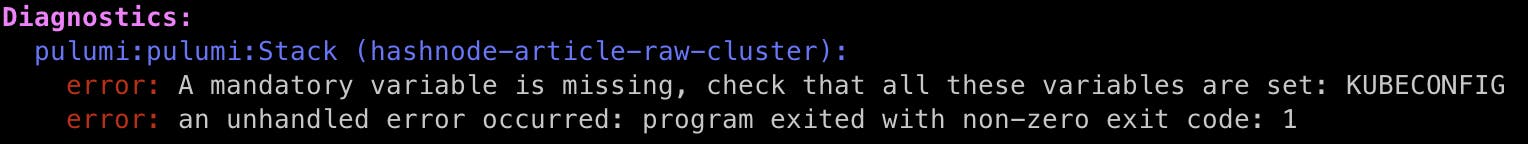

Then, when running pulumi preview or pulumi up without this variable, we get the error shown above.

Next, we can check that the kubeconfig file is valid by running a simple kubectl command against the cluster:

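```go
// Run a simple read-only command to check that the cluster is reachable
cmd := exec.Command("kubectl", "get", "po")
cmdOutput := &bytes.Buffer{}
cmd.Stdout = cmdOutput
// Since Go 1.19, allow running a kubectl binary resolved relative to the current directory
if errors.Is(cmd.Err, exec.ErrDot) {
	cmd.Err = nil
}

if err := cmd.Run(); err != nil {
	_ = ctx.Log.Error("❌ kubectl command failed, cannot communicate with cluster", nil)
	// Stop here, otherwise the success message below would still be logged
	return nil
}

_ = ctx.Log.Info("✅ Connection to cluster validated", nil)
```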

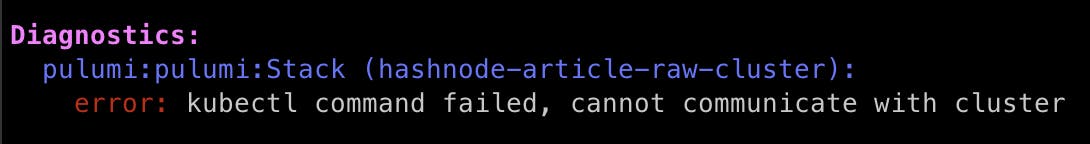

With the same approach, we get an error if the default kubectl get po command fails.

If everything goes fine, we are ready to deploy elements in our cluster!

Code way: with Pulumi

Pulumi follows an "as-code" approach, so we can of course create our cluster from code, as I explained in another article.

Once your cluster is created, you can retrieve the information needed to connect to it directly from your code, without having to set an environment variable like before. To do so, we need to create a Kubernetes Provider.

First, we extract the kubeconfig information from our previously created cluster:

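```go
// initk8s is the helper that creates the cluster and its node pool (see the previous article)
k8sCluster, nodePool, err := initk8s(ctx, clusterIndex)
if err != nil {
	return err
}

// Expose the kubeconfig as a stack output
ctx.Export("kubeconfig", k8sCluster.Kubeconfig)
```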

Then we can create our provider:

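```go
// k8s is github.com/pulumi/pulumi-kubernetes/sdk/v4/go/kubernetes
k8sProvider, err := k8s.NewProvider(ctx, providerName,
	&k8s.ProviderArgs{
		Kubeconfig: k8sCluster.Kubeconfig,
	},
	pulumi.DependsOn([]pulumi.Resource{nodePool, k8sCluster}))
if err != nil {
	return err
}
```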

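Notice the DependsOn option: it tells Pulumi to wait until the given resources are fully created before creating the provider. The dependency on k8sCluster is already implicit, because we pass its Kubeconfig output to the provider, but nodePool is not referenced anywhere in the provider arguments. Without DependsOn, Pulumi could create the provider, and start deploying workloads with it, before any node is available.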

We are ready: let's first deploy a Helm chart.

Deploy a Helm chart

Pulumi provides many objects and methods to interact with Kubernetes and Helm through the dedicated dependency: github.com/pulumi/pulumi-kubernetes/sdk/v4

As an example, we will deploy the Helm chart of the NGINX Ingress Controller (ingress-nginx) in our cluster.

Helm uses values files (values.yaml) to customize how a chart is deployed. So we first need to create a Map to hold our values. In this example, we configure the behavior of the controller:

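```go
// Equivalent to the following values.yaml:
// controller:
//   publishService:
//     enabled: true
helmValues := pulumi.Map{
	"controller": pulumi.Map{
		"publishService": pulumi.Map{
			"enabled": pulumi.Bool(true),
		},
	},
}
```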

Then, for readability, we can create a HelmChartInfo structure to hold information about the chart to deploy:

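```go
// HelmChartInfo groups the settings of a chart to deploy.
// Bool fields default to false (Go zero value): Go itself ignores
// `default:"..."` struct tags, so none are needed here.
type HelmChartInfo struct {
	name            string
	version         string
	url             string
	crds            bool
	createNamespace bool
	values          pulumi.Map
}
```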

Now we simply have to create an instance of HelmChartInfo and a new Release:

```go
// Init info about the chart
chart := HelmChartInfo{
	name:            "ingress-nginx",
	version:         "4.8.3",
	url:             "https://kubernetes.github.io/ingress-nginx",
	createNamespace: true,
	crds:            true,
	values:          helmValues,
}

// Deploy it
// helm is github.com/pulumi/pulumi-kubernetes/sdk/v4/go/kubernetes/helm/v3,
// namespace is defined elsewhere in the program
helmRelease, err := helm.NewRelease(ctx, chart.name, &helm.ReleaseArgs{
	Chart:           pulumi.String(chart.name),
	Namespace:       pulumi.String(namespace),
	CreateNamespace: pulumi.Bool(chart.createNamespace),
	RepositoryOpts: &helm.RepositoryOptsArgs{
		Repo: pulumi.String(chart.url),
	},
	// Beware: crds is passed to SkipCrds, so true means the chart's CRDs are NOT installed
	SkipCrds: pulumi.Bool(chart.crds),
	Version:  pulumi.String(chart.version),
	Values:   chart.values,
})
if err != nil {
	return err
}
```

Once we are done, we can run the Pulumi commands:

```console
$ pulumi up -s raw-cluster
Previewing update (raw-cluster)

View in Browser (Ctrl+O): https://app.pulumi.com/yodamad/hashnode-article/raw-cluster/previews/...

     Type                              Name                          Plan       Info
 +   pulumi:pulumi:Stack               hashnode-article-raw-cluster  create     1 message
 +   └─ kubernetes:helm.sh/v3:Release  ingress-nginx                 create

Diagnostics:
  pulumi:pulumi:Stack (hashnode-article-raw-cluster):
    ✅ Connection to cluster validated

Resources:
    + 2 to create

Do you want to perform this update? yes
Updating (raw-cluster)

View in Browser (Ctrl+O): https://app.pulumi.com/yodamad/hashnode-article/raw-cluster/updates/3

     Type                              Name                          Status            Info
 +   pulumi:pulumi:Stack               hashnode-article-raw-cluster  created (132s)    1 message
 +   └─ kubernetes:helm.sh/v3:Release  ingress-nginx                 created (128s)

Diagnostics:
  pulumi:pulumi:Stack (hashnode-article-raw-cluster):
    ✅ Connection to cluster validated

Resources:
    + 2 created

Duration: 2m21s
```

We can check that everything is deployed:

```console
$ kubectl get po -n nginx-ingress-controller
NAME                                                 READY   STATUS    RESTARTS   AGE
ingress-nginx-80ac5672-controller-79f4bcc7c5-8hf6t   1/1     Running   0          2m
```

Cool!

Note that if you use the "code way" to connect to your cluster, you need to pass the Provider when creating the Helm Release:

```go
helmRelease, err := helm.NewRelease(ctx, chart.name, &helm.ReleaseArgs{ ... }, pulumi.Provider(k8sProvider))
```

Helm is a common way to deploy components in a Kubernetes cluster, but sometimes we may want to deploy elements in a more native way. Let's have a look.

Deploy some native elements

Again, the Pulumi SDK provides fairly complete support for the basic Kubernetes resources. Here are some examples of the resources needed to deploy external-dns in a cluster.

external-dns is a good example, as it requires several resources to be fully deployed.

ServiceAccount

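```go
// k8score is github.com/pulumi/pulumi-kubernetes/sdk/v4/go/kubernetes/core/v1
// k8smeta is github.com/pulumi/pulumi-kubernetes/sdk/v4/go/kubernetes/meta/v1
saArgs := k8score.ServiceAccountArgs{
	Metadata: &k8smeta.ObjectMetaArgs{
		Namespace: pulumi.String("external-dns"),
		// Explicit name, so Pulumi does not append a random suffix
		Name: pulumi.String("external-dns-sa"),
	},
}

serviceAccount, err := k8score.NewServiceAccount(ctx, "external-dns-sa", &saArgs,
	pulumi.DeleteBeforeReplace(true))
if err != nil {
	return err
}
```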

The structures are exactly the same as if we were defining them in a YAML file. Pulumi uses the Args pattern to describe the attributes of a resource. So, for instance, to create a ServiceAccount, you define a ServiceAccountArgs holding its attributes and metadata, then call the NewServiceAccount function to create it.

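Be careful about two things:

Pulumi auto-names resources by appending a random suffix to their name (this is where the 80ac5672 part of the ingress-nginx pod name above comes from). This is not always what you want. You can override this behavior by setting Metadata.Name explicitly in the Args structure, as done above for external-dns-sa.

When a resource has to be replaced, Pulumi creates the new resource before deleting the existing one by default. With explicit names, the new resource can conflict with the existing one. You can force Pulumi to delete the existing resource first by adding the DeleteBeforeReplace option.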

ClusterRole & ClusterRoleBinding

As for the ServiceAccount, we describe these resources just as we would in a YAML file:

```go
// k8sauth is github.com/pulumi/pulumi-kubernetes/sdk/v4/go/kubernetes/rbac/v1
roleBinding := &k8sauth.ClusterRoleBindingArgs{
	Metadata: &k8smeta.ObjectMetaArgs{
		Name: pulumi.String("external-dns-cluster-role-binding"),
	},
	// The ClusterRole holding the permissions (defined in the repository)
	RoleRef: k8sauth.RoleRefArgs{
		ApiGroup: pulumi.String("rbac.authorization.k8s.io"),
		Kind:     pulumi.String("ClusterRole"),
		Name:     pulumi.String("external-dns-cluster-role"),
	},
	// The subject must be the ServiceAccount created above and used by the Deployment
	Subjects: k8sauth.SubjectArray{
		k8sauth.SubjectArgs{
			Kind:      pulumi.String("ServiceAccount"),
			Name:      pulumi.String("external-dns-sa"),
			Namespace: pulumi.String("external-dns"),
		},
	},
}

crb, err := k8sauth.NewClusterRoleBinding(ctx, "external-dns-cluster-role-binding", roleBinding,
	pulumi.DependsOn([]pulumi.Resource{serviceAccount}))
if err != nil {
	return err
}
```

As the ClusterRole definition is quite long and brings no real value to the article (it is just a sequence of rule definitions), you can find it in the repository (link at the end).

Deployment

Finally, we can define our Deployment. As you can see, we can go quite far in the definition of the component, because Pulumi provides full support for the Kubernetes manifest specification.

```go
// v1 is github.com/pulumi/pulumi-kubernetes/sdk/v4/go/kubernetes/apps/v1
// Labels shared by the Deployment, its selector and its pod template
labels := pulumi.StringMap{
	"app.kubernetes.io/instance": pulumi.String("external-dns-cloudflare"),
	"app.kubernetes.io/name":     pulumi.String("external-dns"),
}

deployment := v1.DeploymentArgs{
	Metadata: k8smeta.ObjectMetaArgs{
		Name:      pulumi.String("external-dns-cloudflare"),
		Namespace: pulumi.String("external-dns"),
		Labels:    labels,
	},
	Spec: v1.DeploymentSpecArgs{
		Selector: k8smeta.LabelSelectorArgs{MatchLabels: labels},
		// Recreate: the old pod is stopped before the new one starts
		Strategy: v1.DeploymentStrategyArgs{Type: pulumi.String("Recreate")},
		Template: k8score.PodTemplateSpecArgs{
			Metadata: k8smeta.ObjectMetaArgs{Labels: labels},
			Spec: k8score.PodSpecArgs{
				// ServiceAccountName replaces the deprecated ServiceAccount field
				ServiceAccountName: pulumi.String("external-dns-sa"),
				Containers: k8score.ContainerArray{
					k8score.ContainerArgs{
						Name:  pulumi.String("external-dns-cloudflare"),
						Image: pulumi.String("registry.k8s.io/external-dns/external-dns:v0.14.0"),
						// $(UID) is expanded by Kubernetes from the UID variable below
						Args: pulumi.StringArray{
							pulumi.String("--source=ingress"),
							pulumi.String("--domain-filter=grunty.uk"),
							pulumi.String("--provider=cloudflare"),
							pulumi.String("--txt-prefix=$(UID)-"),
							pulumi.String("--txt-owner-id=$(UID)"),
						},
						Env: k8score.EnvVarArray{
							// Cloudflare credentials, read from existing Secrets
							k8score.EnvVarArgs{
								Name: pulumi.String("CF_API_TOKEN"),
								ValueFrom: k8score.EnvVarSourceArgs{
									SecretKeyRef: k8score.SecretKeySelectorArgs{
										Name: pulumi.String("cloudflare-api-token"),
										Key:  pulumi.String("api-key"),
									},
								},
							},
							k8score.EnvVarArgs{
								Name: pulumi.String("CF_API_EMAIL"),
								ValueFrom: k8score.EnvVarSourceArgs{
									SecretKeyRef: k8score.SecretKeySelectorArgs{
										Name: pulumi.String("cloudflare-user-mail"),
										Key:  pulumi.String("user-mail"),
									},
								},
							},
							// UID: name of the node running the pod, read from the pod spec
							k8score.EnvVarArgs{
								Name: pulumi.String("UID"),
								ValueFrom: k8score.EnvVarSourceArgs{
									FieldRef: k8score.ObjectFieldSelectorArgs{
										FieldPath: pulumi.String("spec.nodeName"),
									},
								},
							},
						},
					},
				},
			},
		},
	},
}

_, err = v1.NewDeployment(ctx, "external-dns-deployment", &deployment,
	pulumi.DependsOn([]pulumi.Resource{crb}),
	pulumi.DeleteBeforeReplace(true))
if err != nil {
	return err
}
```
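The --txt-prefix=$(UID)- and --txt-owner-id=$(UID) arguments rely on Kubernetes dependent environment variables: when starting the container, Kubernetes replaces $(NAME) in the args with the value of the container's NAME environment variable, leaves references to unknown variables unchanged, and turns $$ into a literal $. To make that behavior concrete, here is a simplified re-implementation in plain Go. The expand helper is hypothetical (it is not the kubelet's code), and node-a is a made-up node name.

```go
package main

import (
	"fmt"
	"strings"
)

// expand is a simplified sketch of the $(VAR) expansion applied by Kubernetes
// to container args: known variables are substituted, unknown references are
// kept as-is, and "$$" produces a literal "$".
func expand(input string, env map[string]string) string {
	var b strings.Builder
	for i := 0; i < len(input); i++ {
		c := input[i]
		if c != '$' || i+1 == len(input) {
			b.WriteByte(c)
			continue
		}
		switch input[i+1] {
		case '$':
			// Escaped "$$": emit one '$' and skip the second one
			b.WriteByte('$')
			i++
		case '(':
			end := strings.IndexByte(input[i+2:], ')')
			if end < 0 {
				// No closing parenthesis: copy "$(" literally
				b.WriteString("$(")
				i++
				continue
			}
			name := input[i+2 : i+2+end]
			if value, ok := env[name]; ok {
				b.WriteString(value)
			} else {
				// Unknown variable: keep the reference unchanged
				b.WriteString("$(" + name + ")")
			}
			i += 2 + end // i now points at ')', skipped by the loop increment
		default:
			b.WriteByte(c)
		}
	}
	return b.String()
}

func main() {
	// UID would come from spec.nodeName; "node-a" is a made-up value
	env := map[string]string{"UID": "node-a"}
	for _, arg := range []string{"--txt-prefix=$(UID)-", "--txt-owner-id=$(UID)"} {
		fmt.Println(expand(arg, env))
	}
}
```

This also shows that the TXT owner ID follows the node: if the pod is rescheduled on another node, $(UID) expands to a different value.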

Deploy it with Pulumi

Everything is defined; now we just have to run the classic Pulumi command, pulumi up:

```console
$ pulumi up -s raw-cluster -y
Previewing update (raw-cluster)

View in Browser (Ctrl+O): https://app.pulumi.com/yodamad/hashnode-article/raw-cluster/previews/...

     Type                                                            Name                               Plan       Info
 +   pulumi:pulumi:Stack                                             hashnode-article-raw-cluster       create     1 message
 +   ├─ kubernetes:core/v1:ServiceAccount                            external-dns-sa                    create
 +   ├─ kubernetes:rbac.authorization.k8s.io/v1:ClusterRoleBinding   external-dns-cluster-role-binding  create
 +   ├─ kubernetes:rbac.authorization.k8s.io/v1:ClusterRole          external-dns-cluster-role          create
 +   └─ kubernetes:apps/v1:Deployment                                external-dns-deployment            create

Diagnostics:
  pulumi:pulumi:Stack (hashnode-article-raw-cluster):
    ✅ Connection to cluster validated

Resources:
    + 6 to create

Updating (raw-cluster)

View in Browser (Ctrl+O): https://app.pulumi.com/yodamad/hashnode-article/raw-cluster/updates/9

     Type                                                            Name                               Status            Info
 +   pulumi:pulumi:Stack                                             hashnode-article-raw-cluster       created (131s)    1 message
 +   ├─ kubernetes:core/v1:ServiceAccount                            external-dns-sa                    created (0.45s)
 +   ├─ kubernetes:rbac.authorization.k8s.io/v1:ClusterRoleBinding   external-dns-cluster-role-binding  created (1s)
 +   ├─ kubernetes:rbac.authorization.k8s.io/v1:ClusterRole          external-dns-cluster-role          created (1s)
 +   └─ kubernetes:apps/v1:Deployment                                external-dns-deployment            created (2s)

Diagnostics:
  pulumi:pulumi:Stack (hashnode-article-raw-cluster):
    ✅ Connection to cluster validated

Resources:
    + 6 created

Duration: 2m21s
```

Conclusion

In this quick article, we have seen that Pulumi is not only for infrastructure. You can also easily deploy components in a Kubernetes cluster, with the same high level of customization as in the more classic way: the YAML way!

The complete code is available in this repository

At the time this article was written:

Pulumi CLI & SDK: v3.112

Pulumi Kubernetes SDK: 4.9.1

Thanks to OVHcloud for their support in testing all of this.